What is Apache Spark?

Apache Spark is one of the hottest new trends in the technology space. It is the framework with perhaps the greatest potential to realize the fruits of the marriage between Big Data and Machine Learning. Apache Spark enables companies to process and analyze streaming data that can come from many sources, such as sensors, the web, and mobile applications. Its interactive analysis lets users run ad hoc queries across data stored on thousands of nodes and get results back quickly.

Apache Spark is an open-source, general-purpose, distributed cluster computing framework that can deliver faster analysis than Apache Hadoop's MapReduce engine, largely because it can keep working data in memory. It is designed specifically for large-scale data processing and a wide range of data analysis tasks, and it provides primitives for in-memory cluster computing. Adoption of Apache Spark as the de facto big data analytics engine continues to grow.

Today, Spark has been adopted by major industry players such as Alibaba, Yahoo, Apple, Google, Facebook, and Netflix.

Apache Spark consulting firms help companies process and analyze this kind of streaming data. As a result, companies can analyze both real-time and historical data, which can help them identify business opportunities, detect threats, fight fraud, carry out preventive maintenance, and perform other tasks relevant to running their business. These specialists have hands-on experience with Big Data technologies.

Apache Spark's easy-to-use interface, combined with its in-memory processing, helps data professionals analyze data faster. It lets data analysts work with streaming data, SQL, and machine learning. Because it addresses the shortcomings of Hadoop, it has made its mark in the world of Big Data, so learning Apache Spark is valuable for your career growth. Since Spark and Hadoop work differently, many companies prefer to hire candidates who are knowledgeable in both.

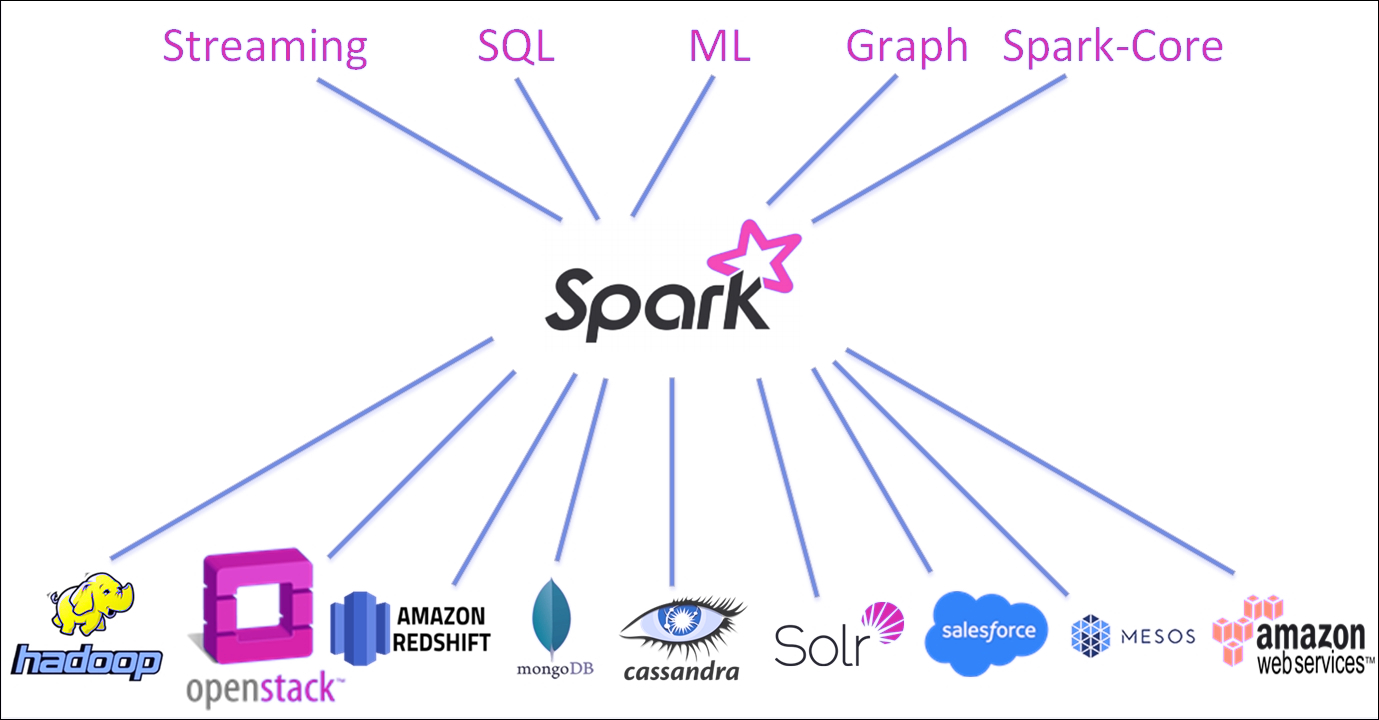

Here are some of the components of Apache Spark you should know about:

- Spark Core – Spark Core contains the basic functionality of Spark, such as memory management, fault recovery, task scheduling, and more. Spark Core also provides many APIs for data manipulation and data processing.

- Spark Streaming – Spark Streaming processes live streams of data, or real-time data. This data typically consists of system log files, social media messages, and so forth.

- Spark SQL – Besides general Big Data processing, Spark can also work with structured data through Spark SQL.

- MLlib – Spark provides various machine learning algorithms, such as regression, classification, and clustering, which help in processing Big Data.

- GraphX – To simplify graph analytics problems, Spark provides a library for manipulating graphs and performing graph-parallel computation. Apache Spark also supports deep learning through Deep Learning Pipelines.

The advantages of using Spark include:

Large-scale data processing:

- Designed to be circulated

- Built for linear scalability at large scale

- Creates and combines large distributed datasets with a single line of code
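In PySpark, a word count over a distributed dataset can be written as one chained expression, for example `sc.parallelize(lines).flatMap(lambda l: l.split()).map(lambda w: (w, 1)).reduceByKey(operator.add)`. The sketch below is plain Python, not Spark; it only models what such a chain does behind the scenes: split the data into partitions, map each partition independently, shuffle pairs by key, then reduce per key. The function name `word_count` and the default of three partitions are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List


def word_count(lines: List[str], num_partitions: int = 3) -> Dict[str, int]:
    """Plain-Python model of a distributed word count (not the Spark API)."""
    # 1. Split the input into partitions, as Spark splits a dataset across executors.
    partitions = [lines[i::num_partitions] for i in range(num_partitions)]

    # 2. Map phase: each partition independently emits (word, 1) pairs.
    mapped = [[(word, 1) for line in part for word in line.split()] for part in partitions]

    # 3. Shuffle: route every pair to a reducer bucket chosen by hashing the key,
    #    so all pairs for the same word end up in the same bucket.
    shuffled = [defaultdict(list) for _ in range(num_partitions)]
    for pairs in mapped:
        for word, one in pairs:
            shuffled[hash(word) % num_partitions][word].append(one)

    # 4. Reduce phase: each bucket sums the counts for the words it owns.
    result: Dict[str, int] = {}
    for bucket in shuffled:
        for word, ones in bucket.items():
            result[word] = sum(ones)
    return result
```

For example, `word_count(["a b a", "b c", "a"])` returns a dict equal to `{"a": 3, "b": 2, "c": 1}`. Because each word is routed to exactly one bucket, the reducers never need to coordinate, which is what lets this pattern scale out across machines.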

Open-source ecosystem that provides:

- Many functions, libraries, and operators

- Many contributions from the community

- APIs for Java, Scala, Python, and R

- Easily works on diverse data that resides in HDFS (Hadoop Distributed File System)

- Can run as a standalone application, on Hadoop and Mesos, or in the cloud.

High-level operators are easier to optimize:

- Traditional parallel processing programs are complex, difficult, and time-consuming to scale

- MapReduce lacks data semantics, making it hard to optimize performance

- Demand for real-time data processing is growing, and Spark's Streaming API can manage and analyze large volumes of data in real time.
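Spark Streaming handles a live stream by cutting it into small batches and processing each batch with the same engine used for batch jobs. The plain-Python sketch below models that idea for keeping running counts of log levels; it is not Spark code. One assumption to note: Spark slices the stream by time interval, whereas this sketch slices by a fixed number of events so that its behavior is deterministic. The names `micro_batches` and `running_counts` are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List


def micro_batches(events: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Group an incoming event stream into fixed-size micro-batches."""
    batch: List[str] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


def running_counts(events: Iterable[str], batch_size: int) -> List[Dict[str, int]]:
    """Aggregate each micro-batch and fold it into state carried across batches."""
    state: Counter = Counter()
    snapshots: List[Dict[str, int]] = []
    for batch in micro_batches(events, batch_size):
        state.update(Counter(batch))  # merge this batch's counts into the running state
        snapshots.append(dict(state))  # record the state after each batch
    return snapshots
```

With the stream `["error", "info", "error", "warn", "error"]` and `batch_size=2`, the three snapshots show `error` climbing from 1 to 2 to 3 as each batch arrives, which is the kind of continuously updated result a streaming dashboard would display.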

Ease of Use:

- Apache Spark comes with easy-to-use APIs for working on large datasets. It provides more than 80 high-level operators that make it easy to build parallel apps.
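Spark's high-level operators such as `map` and `filter` are transformations that Spark evaluates lazily: they only record what to do, and nothing runs until an action such as `collect` or `count` is called. The hypothetical `MiniDataset` class below is a plain-Python model of that chaining style, not Spark's API, and it runs on a single machine.

```python
from typing import Any, Callable, Iterable, List, Tuple


class MiniDataset:
    """Toy model of chaining high-level operators with lazy evaluation (not Spark)."""

    def __init__(self, source: Iterable[Any], steps: Tuple[Tuple[str, Callable], ...] = ()):
        self._source = list(source)
        self._steps = steps  # recorded transformations, not yet executed

    def map(self, fn: Callable[[Any], Any]) -> "MiniDataset":
        # Transformation: return a new dataset with one more recorded step.
        return MiniDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred: Callable[[Any], bool]) -> "MiniDataset":
        # Transformation: likewise only recorded, never run here.
        return MiniDataset(self._source, self._steps + (("filter", pred),))

    def collect(self) -> List[Any]:
        """Action: run all recorded transformations and return the results."""
        items: Iterable[Any] = self._source
        for kind, fn in self._steps:
            items = map(fn, items) if kind == "map" else filter(fn, items)
        return list(items)

    def count(self) -> int:
        """Action: number of elements after all transformations."""
        return len(self.collect())
```

For instance, `MiniDataset(range(6)).map(lambda x: x * x).filter(lambda y: y % 2 == 0)` does no work when built; calling `.collect()` on it returns `[0, 4, 16]`. Deferring execution until an action is what gives an engine the chance to look at the whole chain and optimize it before running anything.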

Need to work with expert specialists in these technologies? Given their track record of successful projects, Apache Spark development firms in India are well positioned to deliver an excellent return on investment. They offer flexible plans under which you can hire specialists full-time, hourly, or contract-to-hire.