Businesses today generate an abundance of raw data every day. The real value comes from transforming that raw data into insights that support better decision-making. If you cannot turn your data into useful insights, you risk falling behind your competitors. This is why data engineering matters for businesses in every industry, whether manufacturing, retail, healthcare, education, or any other.

Processing and harnessing complex data streams can be a daunting and error-prone task. Hence, you must have the right set of professionals and tools to perform this task. Azure Data Engineering offers a cloud-based infrastructure to help you manage all your data with ease and peace of mind. This blog aims to help you get detailed insights about data engineering, its important components, some amazing technologies, Azure Data Engineering, real-life use cases, and much more.

What is Data Engineering?

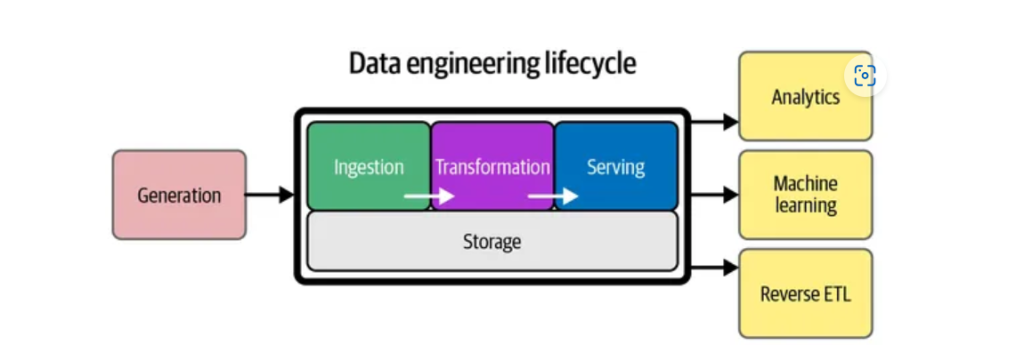

Data engineering is the practice of designing, building, and managing an organization’s data infrastructure. This infrastructure enables organizations to store, process, and analyze huge amounts of data. Data engineers build pipelines that transform raw data from different sources into clean, structured datasets, which data scientists and analysts can then use to gain insights.

Core Components of Data Engineering

Data engineering includes collecting, transforming, and optimizing raw data into useful formats. Many tools and technologies are available in the market, but the core components remain the same, and they are the pillars of any successful data engineering strategy. The core components of data engineering include:

- Data Collection

Data collection is at the center of any data engineering system. It means gathering data from internal and external sources, such as APIs, databases, social media platforms, sensors, log files, and third-party providers.

Collecting data from many different sources is difficult. Data can arrive in unstructured or semi-structured formats, such as raw text or JSON files, or in structured formats, such as tables from SQL databases. Data engineers must make sure that all the important and relevant data is collected.
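As a minimal sketch of what collecting from mixed sources can look like, the Python below reads one JSON payload and one CSV export and maps both into the same record shape. The sample payloads, the field names (`customerId`, `customer_id`, `amount`), and the helper functions are assumptions made for illustration; they are not tied to any particular product.

```python
import csv
import io
import json

# Hypothetical sample inputs: an API-style JSON payload and a CSV export.
JSON_PAYLOAD = '[{"customerId": "C1", "amount": "19.99"}, {"customerId": "C2", "amount": "5"}]'
CSV_EXPORT = "customer_id,amount\nC3,42.50\nC4,7.25\n"


def collect_from_json(payload: str) -> list[dict]:
    """Parse a JSON array and map its keys onto the common record shape."""
    return [
        {"customer_id": item["customerId"], "amount": float(item["amount"]), "source": "api"}
        for item in json.loads(payload)
    ]


def collect_from_csv(text: str) -> list[dict]:
    """Parse CSV text with a header row into the common record shape."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"customer_id": row["customer_id"], "amount": float(row["amount"]), "source": "csv"}
        for row in reader
    ]


# Both sources now share one shape, so later stages can treat them alike.
records = collect_from_json(JSON_PAYLOAD) + collect_from_csv(CSV_EXPORT)
for record in records:
    print(record)
```

Keeping a `source` field on every record is one simple way to trace each row back to where it came from.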

Key Technologies

- Database Management Systems (DBMS): MySQL, PostgreSQL, and Microsoft SQL Server.

- API Integration: RESTful services, webhooks, and third-party API connectors.

- Message Queues: Apache Kafka and RabbitMQ. They help to capture real-time data streams from sensors and apps.

- Data Ingestion

After the data is collected, it must be ingested into a central system for processing. Ingestion means loading data into a data platform or storage system that can manage both structured and unstructured data. Data can be ingested in two ways:

- Batch Processing: A large amount of data is collected and ingested at set intervals, such as hourly or daily.

- Real-Time or Streaming: In this way, the data is ingested the moment it becomes available. Hence, it allows real-time insights and analytics.

Of the two, batch ingestion is more common for enterprises that do not need their data urgently. For use cases such as customer engagement and fraud detection, however, real-time ingestion is important.
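The difference between the two ingestion modes can be sketched in a few lines of plain Python. This is an illustration only: the `ingest_batch` and `ingest_stream` helpers and the sample events are hypothetical, and a real system would read from a queue or a scheduler rather than an in-memory list.

```python
from typing import Iterable, Iterator


def ingest_batch(events: Iterable[dict], batch_size: int) -> Iterator[list[dict]]:
    """Group events and hand them over one batch at a time."""
    batch: list[dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


def ingest_stream(events: Iterable[dict]) -> Iterator[dict]:
    """Hand over each event as soon as it arrives."""
    for event in events:
        yield event


# Hypothetical events; in practice these would come from a queue or an API.
events = [{"id": i, "amount": 10 * i} for i in range(1, 6)]

batches = list(ingest_batch(events, batch_size=2))
streamed = list(ingest_stream(events))

print([len(b) for b in batches])  # size of each batch
print(len(streamed))              # one item per event
```

Batching trades latency for fewer, larger writes, while streaming makes each event available immediately at the cost of more frequent processing.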

Key Technologies

- Batch Processing: Apache Sqoop, Azure Data Factory.

- Real-Time Processing: Apache Kafka, Azure Stream Analytics, Amazon Kinesis.

- Data Storage

After the data is ingested, it should be stored in a system that can handle huge amounts of data and keep it accessible for further analysis. Data engineers choose storage solutions based on the volume and variety of the data and on the analysis it needs to support. The three main types of storage systems are:

- Relational Databases: SQL databases store structured data in a predefined schema. They are best suited to transactional data.

- Data Lakes: Here, massive volumes of semi-structured and unstructured data are stored. Data lakes are best for storing raw data, which can be refined later on.

- Data Warehouses: These are highly structured storage systems that contain processed and curated data. They are optimized for query performance and accurate reporting.

Key Technologies

- Relational Databases: Microsoft SQL Server, MySQL, PostgreSQL.

- Data Lakes: Azure Data Lake Storage, Amazon S3.

- Data Warehouses: Snowflake, Google BigQuery, Azure Synapse Analytics.

- Data Processing and Transformation

Raw data is rarely ready for analysis. Data engineers must process and transform it to get proper insights, through tasks like filtering, joining, cleansing, and aggregating. This is where Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT) pipelines come in. An ETL pipeline extracts data from the source systems, transforms it as per business logic, and then loads it into the storage system for further analysis. An ELT pipeline loads the extracted data first and transforms it inside the storage system.
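To make the extract, transform, and load stages concrete, here is a minimal ETL sketch that uses only the Python standard library. The sample rows, the `sales_by_region` table, and the three helper functions are assumptions for illustration, and an in-memory SQLite database stands in for the target storage system.

```python
import sqlite3

# Hypothetical raw rows as they might arrive from a source system.
RAW_SALES = [
    {"region": " North ", "amount": "120.50"},
    {"region": "south", "amount": "80"},
    {"region": "North", "amount": "not-a-number"},  # invalid, should be dropped
    {"region": "South", "amount": "20.00"},
]


def extract() -> list[dict]:
    """Return the raw rows (a stand-in for reading from a source system)."""
    return list(RAW_SALES)


def transform(rows: list[dict]) -> dict[str, float]:
    """Cleanse region names, filter invalid amounts, and aggregate by region."""
    totals: dict[str, float] = {}
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # filtering: skip rows whose amount cannot be parsed
        region = row["region"].strip().title()  # cleansing
        totals[region] = totals.get(region, 0.0) + amount  # aggregating
    return totals


def load(totals: dict[str, float], conn: sqlite3.Connection) -> None:
    """Write the aggregated totals into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO sales_by_region (region, total) VALUES (?, ?)",
        totals.items(),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
rows = conn.execute("SELECT region, total FROM sales_by_region ORDER BY region").fetchall()
print(rows)
```

In an ELT variant, the raw rows would be loaded into a staging table first, and the cleansing and aggregation would then run as SQL inside the target system.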

Key Technologies

- ETL Tools: Talend, Apache NiFi, Azure Data Factory.

- Distributed Processing: Apache Spark, Hadoop, Databricks.

- Data Pipeline Automation

Data pipelines are automated workflows that integrate the different stages of data processing, from ingestion to transformation to storage. Automation ensures that these pipelines run without manual intervention. This is especially beneficial when you need to ingest and process data in real time.

A robust data pipeline reduces downtime and errors. This empowers organizations to manage continuous data flow with minimal disruption.
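The idea of running stages in order without manual intervention can be sketched as a tiny runner with retries. This is a simplified illustration, not how any specific orchestration tool works; the `run_pipeline` function, the stage names, and the simulated failure are all hypothetical.

```python
import time
from typing import Callable

Stage = Callable[[list], list]


def run_pipeline(stages: list[tuple[str, Stage]], data: list,
                 max_retries: int = 2, delay_seconds: float = 0.0) -> list:
    """Run each stage in order, retrying a failed stage before giving up."""
    for name, stage in stages:
        for attempt in range(1, max_retries + 2):  # first try plus retries
            try:
                data = stage(data)
                print(f"{name}: ok on attempt {attempt}")
                break
            except Exception as exc:
                print(f"{name}: attempt {attempt} failed ({exc})")
                if attempt > max_retries:
                    raise  # retries exhausted, surface the error
                time.sleep(delay_seconds)
    return data


calls = {"transform": 0}


def ingest(_: list) -> list:
    return [3, 1, 2]


def flaky_transform(rows: list) -> list:
    """Fail once to simulate a transient error, then succeed."""
    calls["transform"] += 1
    if calls["transform"] == 1:
        raise RuntimeError("temporary failure")
    return sorted(rows)


def store(rows: list) -> list:
    return rows


result = run_pipeline(
    [("ingest", ingest), ("transform", flaky_transform), ("store", store)], []
)
print(result)
```

Dedicated orchestration tools add scheduling, dependency graphs, and monitoring on top of this basic retry-and-continue pattern.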

Key Technologies

- Orchestration Tools: Apache Airflow, Azure Data Factory, Prefect.

- CI/CD for Data: Jenkins, GitLab CI/CD integrated with data pipeline tools.

- Data Security

Data privacy laws like GDPR and CCPA have made data protection requirements stricter. Hence, data security is a top priority for your organization. Data engineers must ensure that a company’s sensitive data is protected throughout its entire lifecycle by building robust security measures into every data-related process, from collection to storage. Key security practices include data encryption, access control (RBAC and IAM), and data masking and anonymization of personally identifiable information (PII).
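As one small illustration of masking PII, the sketch below replaces an email address with a keyed hash and a partially hidden display value. It uses Python's standard `hmac` and `hashlib` modules; the `SECRET_KEY` value, the record fields, and the helper names are assumptions for illustration. Keyed hashing is pseudonymization rather than full anonymization, so treat this as a starting point, not a compliance guarantee.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str) -> str:
    """Replace a PII value with a keyed hash so records can still be joined."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


record = {"email": "jane.doe@example.com", "amount": 42.0}
safe_record = {
    "customer_key": pseudonymize(record["email"]),
    "email_masked": mask_email(record["email"]),
    "amount": record["amount"],
}
print(safe_record)
```

Because the same input always produces the same key, analysts can still count or join records per customer without seeing the original email address.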

Key Technologies

- Encryption Tools: Azure Key Vault, AWS KMS, OpenSSL.

- Access Control: Microsoft Entra ID (formerly Azure Active Directory), AWS IAM.

- Data Masking Tools: IBM InfoSphere Optim, Informatica Data Masking.

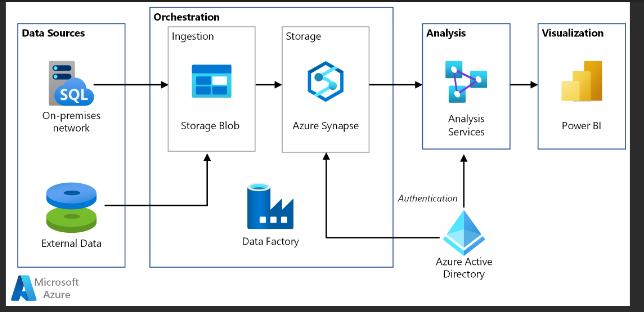

The Role of Azure in Data Engineering

Azure Data Engineering is a strong option when you want to implement cloud-based data engineering. It integrates many Microsoft Azure services, including Azure Data Factory, Azure Databricks, Azure SQL Database, and Azure Synapse Analytics. Together, these integrated services help to streamline the data lifecycle.

Key Azure Services for Data Engineering

| Service | Functionality | Use Cases |
| --- | --- | --- |
| Azure Data Factory | It is a cloud-based ETL service. It automates the data movement and transformation across different sources. | Automate Data Workflows, Hybrid Data Pipelines |
| Azure Databricks | It is a collaborative platform built on Apache Spark. It is specifically optimized for big data analytics and machine learning (ML). | Real-time Analytics, Machine Learning |
| Azure Synapse | Azure Synapse is an integrated analytics service. It connects big data and data warehousing. | Enterprise Data Warehousing, Business Intelligence |
| Azure SQL Database | It is a fully managed relational database. It helps to build highly scalable cloud apps. | Transactional Systems, Data Lakes & Data Warehousing |
| Azure Blob Storage | It helps to store massive amounts of unstructured data like binary data or text. | Data Lakes, Backup, Archival Solutions |

Benefits of Using Azure for Data Engineering

- Seamless Integration: Azure tools are deeply integrated with each other, which enables smooth data flow across different services.

- High Scalability: One of the other reasons enterprises choose Azure is its high-end scalability. It can manage large volumes of data as your business grows.

- Great Security: Azure offers advanced encryption and compliance functionalities that help you maintain strong security and data privacy.

- Cost-Friendly: Azure has a dedicated Azure Cost Management tool that allows businesses to optimize their resource utilization. They pay only for what they actually use.

How to Build a Robust Data Pipeline with Azure?

If you want to see the power of Azure Data Engineering, have a look at this guide. Below are some simple steps for building a data pipeline with the help of Azure services.

- Step 1: Data Ingestion

The first step is to collect data from various sources. With the help of Azure Data Factory, enterprises can connect to cloud sources, on-premises databases, and 3rd party APIs.

For instance, a retail enterprise can collect data from different systems like ERP, CRM, and their eCommerce website.

- Step 2: Data Storage

After ingestion, the data is stored in Azure Data Lake Storage or Azure Blob Storage. Both are scalable storage solutions that enable you to store structured and unstructured data.

For instance, the retail company can store both transactional data (sales, inventory, etc.) and customer data (purchase history, preferences, etc.) in the lake.

- Step 3: Data Transformation

After the data is stored, the third step is to clean, transform, and prepare it for further analysis. Azure Databricks is well suited to this step. It enables real-time data processing and advanced transformation with the help of Apache Spark.

For instance, the retail company can transform its sales data by cleaning invalid entries, aggregating metrics by region, and calculating customer lifetime value.
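The three transformations in this retail example can be sketched in plain Python. This is not Spark or Databricks code; it only illustrates the logic. The sample rows, the validity rule, and the simplified customer lifetime value (taken here as each customer's total spend) are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical sales rows; field names are illustrative.
sales = [
    {"customer_id": "C1", "region": "North", "amount": 100.0},
    {"customer_id": "C2", "region": "South", "amount": 40.0},
    {"customer_id": "C1", "region": "North", "amount": 60.0},
    {"customer_id": None, "region": "South", "amount": 25.0},  # invalid: no customer
    {"customer_id": "C3", "region": "South", "amount": -5.0},  # invalid: negative amount
]


def is_valid(row: dict) -> bool:
    """A row is kept only if it has a customer and a positive amount."""
    return row["customer_id"] is not None and row["amount"] > 0


# Step 1: clean invalid entries.
clean = [row for row in sales if is_valid(row)]

# Steps 2 and 3: aggregate by region and sum spend per customer.
sales_by_region: dict[str, float] = defaultdict(float)
lifetime_value: dict[str, float] = defaultdict(float)
for row in clean:
    sales_by_region[row["region"]] += row["amount"]
    lifetime_value[row["customer_id"]] += row["amount"]

print(dict(sales_by_region))
print(dict(lifetime_value))
```

On a Spark-based platform the same steps would typically be expressed as a filter followed by grouped aggregations over a distributed dataset.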

- Step 4: Data Analytics and Reporting

Then, this transformed data is moved to Azure Synapse Analytics. Azure Synapse enables organizations to run complex queries. It also helps to build ML models and create reports with the help of Power BI.

For instance, the retail company can create dashboards to keep an eye on regional sales performance. They can also monitor customer behavior trends, real-time inventory levels, and much more.

- Step 5: Data Security and Compliance

The last and most important step is to ensure that your data engineers use Azure’s built-in security features throughout the process. Features like access control, encryption, and network security help to protect sensitive business information.

How Data Engineering Drives Business Success for Different Industries?

The Use Cases

Enterprises across different industries are implementing data engineering to boost their growth and accelerate efficiency. Here are some industry-specific applications of data engineering.

| Industry | Use Cases |
| --- | --- |
| Manufacturing | Predictive maintenance, supply chain optimization, and quality control. This can be done by analyzing real-time sensor and production data. |
| Retail | Personalized marketing, inventory forecasting, and real-time demand analysis. This can be done by integrating sales and customer data. |
| Healthcare | Optimize patient care, minimize operational costs, and ensure regulatory compliance. This can be done by analyzing medical records and operational data. |
| Finance | Fraud detection, credit risk analysis, and automated reporting. This can be done by processing real-time transactional data. |
| Education | Track student performance, optimize resource allocation, and enhance administrative tasks. This can be done by analyzing academic and operational data. |

Conclusion

Data engineering is the foundation for turning data assets into business success. Without it, enterprises would struggle to use the vast amount of raw data that is generated every day. It helps to use data at the right place and at the right time to drive business growth. By implementing robust data pipelines, enterprises can transform raw data into value-driven insights. Azure Data Engineering supports smarter decision-making by giving organizations secure, scalable tools to harness the full potential of their data.

Are you looking to modernize your data infrastructure? Explore DynaTech’s Advanced Data Engineering Services. Need more help? Our experts can help you take your data strategy to the next level. Connect with our professionals today!